AI Adoption in the Enterprise 2022 – O’Reilly

In December 2021 and January 2022, we asked recipients of our Data and AI Newsletters to participate in our annual survey on AI adoption. We were particularly interested in what, if anything, has changed since last year. Are companies farther along in AI adoption? Do they have working applications in production? Are they using tools like AutoML to generate models, and other tools to streamline AI deployment? We also wanted to get a sense of where AI is headed. The hype has clearly moved on to blockchains and NFTs. AI is in the news often enough, but the steady drumbeat of new advances and techniques has gotten a lot quieter.

Compared to last year, significantly fewer people responded. That’s probably a result of timing. This year’s survey ran during the holiday season (December 8, 2021, to January 19, 2022, though we received very few responses in the new year); last year’s ran from January 27, 2021, to February 12, 2021. Pandemic or not, holiday schedules no doubt limited the number of respondents.

Our results held a bigger surprise, though. The smaller number of respondents notwithstanding, the results were surprisingly similar to 2021. Furthermore, if you go back another year, the 2021 results were themselves surprisingly similar to 2020. Has that little changed in the application of AI to enterprise problems? Perhaps. We considered the possibility that the same individuals responded in both 2021 and 2022. That wouldn’t be surprising, since both surveys were publicized through our mailing lists—and some people like responding to surveys. But that wasn’t the case. At the end of the survey, we asked respondents for their email address. Among those who provided an address, there was only a 10% overlap between the two years.
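
The overlap check described above comes down to a set intersection. Here is a minimal sketch in Python, assuming that "overlap" means the share of this year's addresses that also appeared last year (the report doesn't define it precisely). The addresses are made up, and the function name `overlap_fraction` is ours, chosen for illustration.

```python
def overlap_fraction(current, previous):
    """Share of distinct current-year addresses also present in the previous year."""
    # Normalize so that case and stray whitespace don't hide a match.
    cur = {e.strip().lower() for e in current if e and e.strip()}
    prev = {e.strip().lower() for e in previous if e and e.strip()}
    if not cur:
        return 0.0
    return len(cur & prev) / len(cur)


# Hypothetical data: 10 addresses this year, one of which also appears last year.
emails_2021 = ["ann@example.com", "bob@example.com", "cy@example.com"]
emails_2022 = ["Ann@Example.com "] + [f"user{i}@example.com" for i in range(9)]

print(overlap_fraction(emails_2022, emails_2021))
```

Dividing by the size of the union of both years instead would give a smaller figure, so the choice of denominator matters when comparing overlap numbers.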

When nothing changes, there’s room for concern: we certainly aren’t in an “up and to the right” space. But is that just an artifact of the hype cycle? After all, regardless of any technology’s long-term value or importance, it can only receive outsized media attention for a limited time. Or are there deeper issues gnawing at the foundations of AI adoption?

AI Adoption

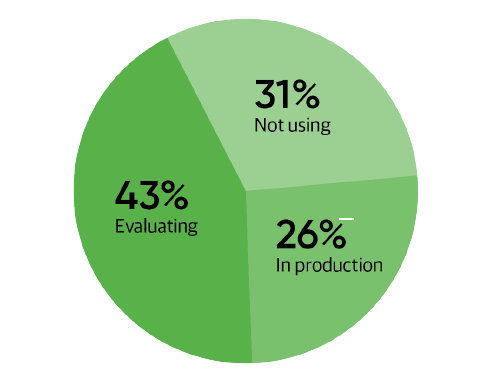

We asked participants about the level of AI adoption in their organization. We structured the responses to that question differently from prior years, in which we offered four responses: not using AI, considering AI, evaluating AI, and having AI projects in production (which we called “mature”). This year we combined “evaluating AI” and “considering AI”; we thought that the difference between “evaluating” and “considering” was poorly defined at best, and if we didn’t know what it meant, our respondents didn’t either. We kept the question about projects in production, and we’ll use the words “in production” rather than “mature practice” to talk about this year’s results.

Despite the change in the question, the responses were surprisingly similar to last year’s. The same percentage of respondents said that their organizations had AI projects in production (26%). Significantly more said that they weren’t using AI: that went from 13% in 2021 to 31% in this year’s survey. It’s not clear what that shift means. It’s possible that it’s just a reaction to the change in the answers; perhaps respondents who were “considering” AI thought “considering really means that we’re not using it.” It’s also possible that AI is just becoming part of the toolkit, something developers use without thinking twice. Marketers use the term AI; software developers tend to say machine learning. To the customer, what’s important isn’t how the product works but what it does. There’s already a lot of AI embedded into products that we never think about.

From that standpoint, many companies with AI in production don’t have a single AI specialist or developer. Anyone using Google, Facebook, or Amazon (and, I presume, most of their competitors) for advertising is using AI. AI as a service includes AI packaged in ways that may not look at all like neural networks or deep learning. If you install a smart customer service product that uses GPT-3, you’ll never see a hyperparameter to tune—but you have deployed an AI application. We don’t expect respondents to say that they have “AI applications deployed” if their company has an advertising relationship with Google, but AI is there, and it’s real, even if it’s invisible.

Are those invisible applications the reason for the shift? Is AI disappearing into the walls, like our plumbing (and, for that matter, our computer networks)? We’ll have reason to think about that throughout this report.

Regardless, at least in some quarters, attitudes seem to be solidifying against AI, and that could be a sign that we’re approaching another “AI winter.” We don’t think so, given that the percentage of respondents who report AI in production is holding steady (26% in both years). However, it is a sign that AI has passed to the next stage of the hype cycle. When expectations about what AI can deliver are at their peak, everyone says they’re doing it, whether or not they really are. And once you hit the trough, no one says they’re using it, even though they now are.

The trailing edge of the hype cycle has important consequences for the practice of AI. When it was in the news every day, AI didn’t really have to prove its value; it was enough to be interesting. But once the hype has died down, AI has to show its value in production, in real applications: it’s time for it to prove that it can deliver real business value, whether that’s cost savings, increased productivity, or more customers. That will no doubt require better tools for collaboration between AI systems and consumers, better methods for training AI models, and better governance for data and AI systems.

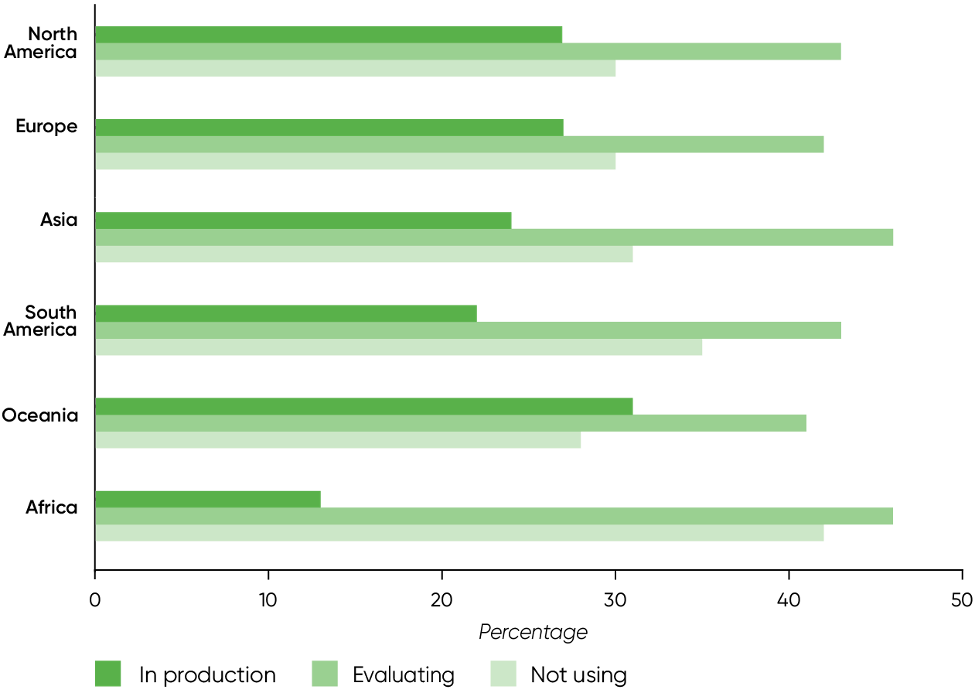

Adoption by Continent

When we looked at responses by geography, we didn’t see much change since last year. The greatest increase in the percentage of respondents with AI in production was in Oceania (from 18% to 31%), but that was a relatively small segment of the total number of respondents (only 3.5%)—and when there are few respondents, a small change in the numbers can produce a large change in the apparent percentages. For the other continents, the percentage of respondents with AI in production agreed within 2%.
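
To make the small-sample caveat concrete, the sketch below computes a 95% Wilson score interval for a proportion. The respondent counts are hypothetical: the report gives only percentages, so `11 of 35` and `270 of 1000` are stand-ins for "a small region near 31%" and "a large region at 27%," not survey data.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 is roughly 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half, center + half


# Hypothetical counts, chosen only to contrast a small and a large group.
small = wilson_interval(11, 35)      # about 31% of a small group
large = wilson_interval(270, 1000)   # 27% of a large group

for label, (low, high) in [("small group", small), ("large group", large)]:
    print(f"{label}: {low:.1%} to {high:.1%} (width {high - low:.1%})")
```

Under these assumed counts, the small group's interval is roughly 30 percentage points wide, while the large group's is under 6. A swing from 18% to 31% in a group of that size is therefore hard to distinguish from noise.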

After Oceania, North America and Europe had the greatest percentages of respondents with AI in production (both 27%), followed by Asia and South America (24% and 22%, respectively). Africa had the smallest percentage of respondents with AI in production (13%) and the largest percentage of nonusers (42%). However, as with Oceania, the number of respondents from Africa was small, so it’s hard to put too much credence in these percentages. We continue to hear exciting stories about AI in Africa, many of which demonstrate creative thinking that is sadly lacking in the VC-frenzied markets of North America, Europe, and Asia.

Adoption by Industry

The distribution of respondents by industry was almost the same as last year. The largest percentages of respondents were from the computer hardware and financial services industries (both about 15%, though computer hardware had a slight edge), education (11%), and healthcare (9%). Many respondents reported their industry as “Other,” which was the third most common answer. Unfortunately, this vague category isn’t very helpful, since it featured industries ranging from academia to wholesale, and included some exotica like drones and surveillance—intriguing but hard to draw conclusions from based on one or two responses. (Besides, if you’re working on surveillance, are you really going to tell people?) There were well over 100 unique responses, many of which overlapped with the industry sectors that we listed.

We see a more interesting story when we look at the maturity of AI practices in these industries. The retail and financial services industries had the greatest percentages of respondents reporting AI applications in production (37% and 35%, respectively). These sectors also had the fewest respondents reporting that they weren’t using AI (26% and 22%). That makes a lot of intuitive sense: just about all retailers have established an online presence, and part of that presence is making product recommendations, a classic AI application. Most retailers using online advertising services rely heavily on AI, even if they don’t consider using a service like Google “AI in production.” AI is certainly there, and it’s driving revenue, whether or not they’re aware of it. Similarly, financial services companies were early adopters of AI: automated check reading was one of the first enterprise AI applications, dating to well before the current surge in AI interest.

Education and government were the two sectors with the fewest respondents reporting AI projects in production (9% for both). Both sectors had many respondents reporting that they were evaluating the use of AI (46% and 50%). These two sectors also had the largest percentage of respondents reporting that they weren’t using AI. These are industries where appropriate use of AI could be very important, but they’re also areas in which a lot of damage could be done by inappropriate AI systems. And, frankly, they’re both areas that are plagued by outdated IT infrastructure. Therefore, it’s not surprising that we see a lot of people evaluating AI—but also not surprising that relatively few projects have made it into production.

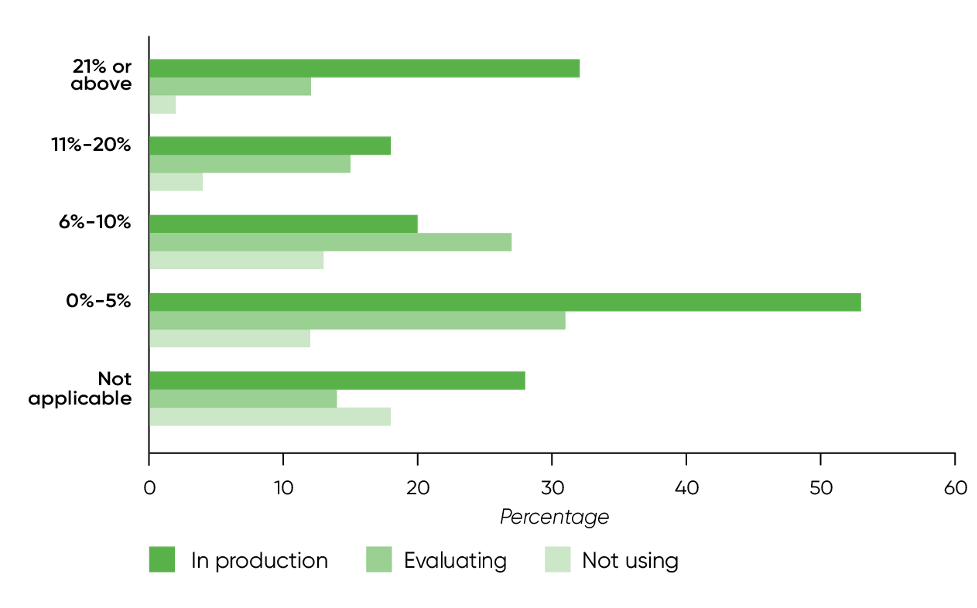

As you’d expect, respondents from companies with AI in production reported that a larger portion of their IT budget was spent on AI than did respondents from companies that were evaluating or not using AI. Among respondents with AI in production, 32% reported that their companies spent over 21% of their IT budget on AI (18% reported that 11%–20% of the IT budget went to AI; 20% reported 6%–10%). Only 12% of respondents who were evaluating AI reported that their companies were spending over 21% of the IT budget on AI projects. The largest share of respondents who were evaluating AI (31%) came from organizations that were spending under 5% of their IT budget on AI; in most cases, “evaluating” means a relatively small commitment. (And remember that roughly half of all respondents were in the “evaluating” group.)

The big surprise was among respondents who reported that their companies weren’t using AI. You’d expect their AI spending to be zero, and indeed, over half of the respondents (53%) selected 0%–5%; we’ll assume that means 0. Another 28% checked “Not applicable,” also a reasonable response for a company that isn’t investing in AI. But a measurable number had other answers, including 2% (10 respondents) who indicated that their organizations were spending over 21% of their IT budgets on AI projects. Of the respondents not using AI, 13% indicated that their companies were spending 6%–10% on AI, and 4% of that group estimated AI expenses in the 11%–20% range. So even when our respondents report that their organizations aren’t using AI, we find that they’re doing something: experimenting, considering, or otherwise “kicking the tires.” Will these organizations move toward adoption in the coming years? That’s anyone’s guess, but AI may be penetrating organizations that are on the back side of the adoption curve (the so-called “late majority”).

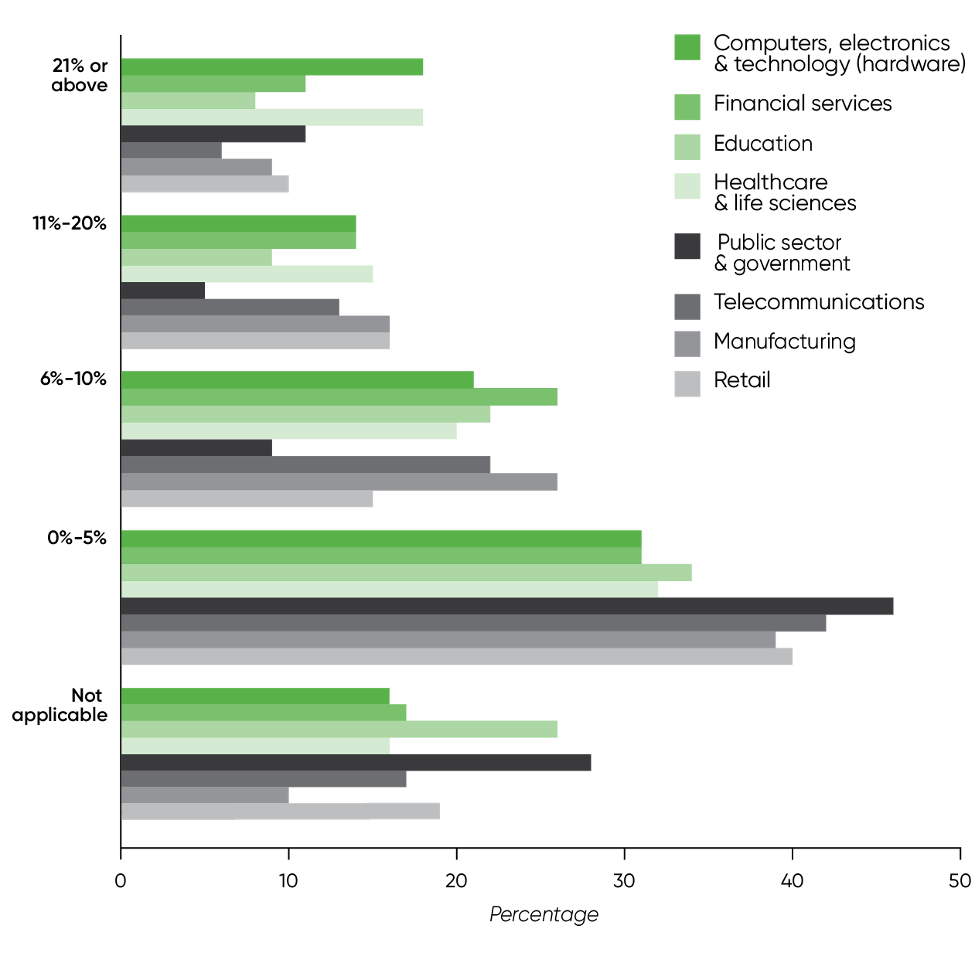

Now look at the graph showing the percentage of IT budget spent on AI by industry. Just eyeballing this graph shows that most companies are in the 0%–5% range. But it’s more interesting to look at what industries are, and aren’t, investing in AI. Computers and healthcare have the most respondents saying that over 21% of the budget is spent on AI. Government, telecommunications, manufacturing, and retail are the sectors where respondents report the smallest (0%–5%) expense on AI. We’re surprised at the number of respondents from retail who report low IT spending on AI, given that the retail sector also had a high percentage of practices with AI in production. We don’t have an explanation for this, aside from saying that any study is bound to expose some anomalies.

Bottlenecks

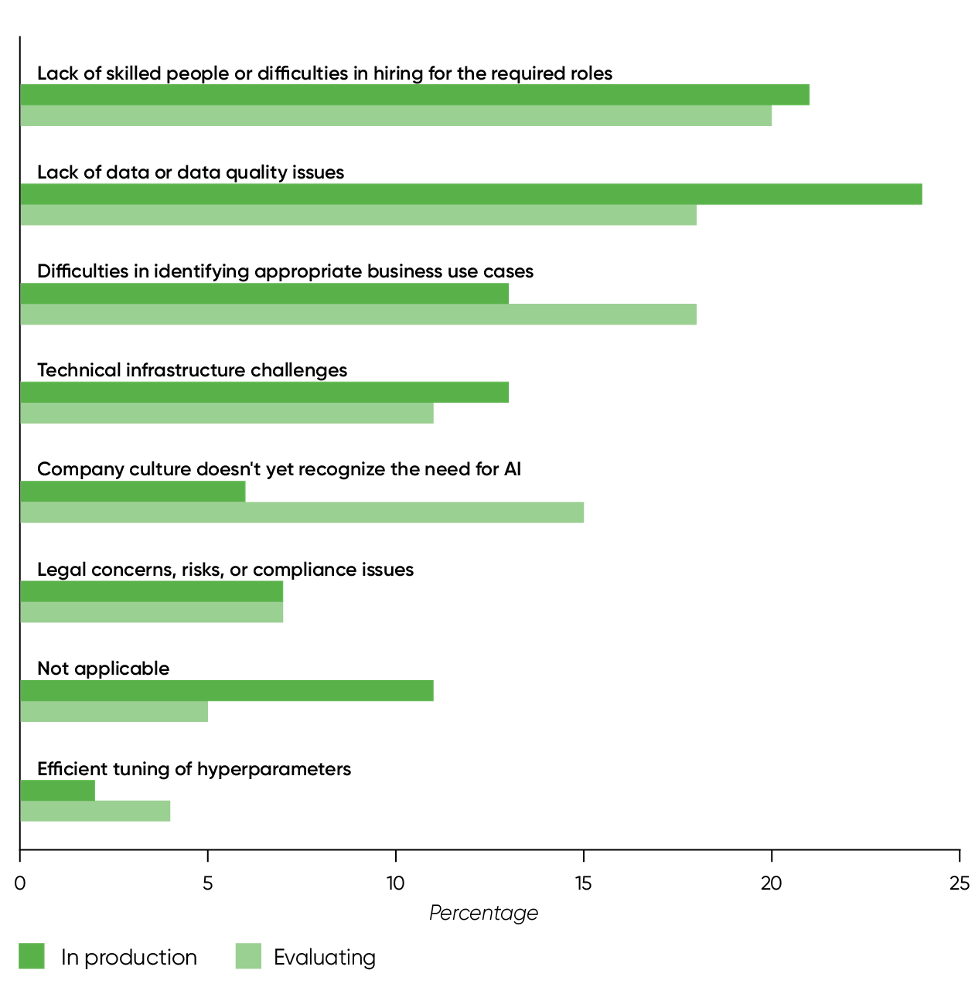

We asked respondents what the biggest bottlenecks were to AI adoption. The answers were strikingly similar to last year’s. Taken together, respondents with AI in production and respondents who were evaluating AI say the biggest bottlenecks were lack of skilled people and lack of data or data quality issues (both at 20%), followed by finding appropriate use cases (16%).

Looking at “in production” and “evaluating” practices separately gives a more nuanced picture. Respondents whose organizations were evaluating AI were much more likely to say that company culture is a bottleneck, a challenge that Andrew Ng addressed in a recent issue of his newsletter. They were also more likely to see problems in identifying appropriate use cases. That’s not surprising: if you have AI in production, you’ve at least partially overcome problems with company culture, and you’ve found at least some use cases for which AI is appropriate.

Respondents with AI in production were significantly more likely to point to lack of data or data quality as an issue. We suspect this is the result of hard-won experience. Data always looks much better before you’ve tried to work with it. When you get your hands dirty, you see where the problems are. Finding those problems, and learning how to deal with them, is an important step toward developing a truly mature AI practice. These respondents were somewhat more likely to see problems with technical infrastructure—and again, understanding the problem of building the infrastructure needed to put AI into production comes with experience.

Respondents who are using AI (the “evaluating” and “in production” groups—that is, everyone who didn’t identify themselves as a “non-user”) were in agreement on the lack of skilled people. A shortage of trained data scientists has been predicted for years. In last year’s survey of AI adoption, we noted that we’ve finally seen this shortage come to pass, and we expect it to become more acute. This group of respondents were also in agreement about legal concerns. Only 7% of the respondents in each group listed this as the most important bottleneck, but it’s on respondents’ minds.

And nobody’s worrying very much about hyperparameter tuning.

Looking a bit further into the difficulty of hiring for AI, we found that respondents with AI in production saw the most significant skills gaps in these areas: ML modeling and data science (45%), data engineering (43%), and maintaining a set of business use cases (40%). We can rephrase these skills as core AI development, building data pipelines, and product management. Product management for AI, in particular, is an important and still relatively new specialization that requires understanding the specific requirements of AI systems.

AI Governance

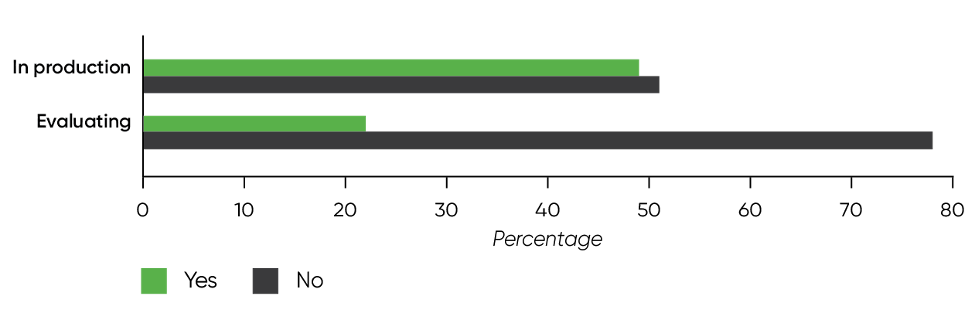

Among respondents with AI products in production, the number of those whose organizations had a governance plan in place to oversee how projects are created, measured, and observed was roughly the same as those that didn’t (49% yes, 51% no). Among respondents who were evaluating AI, relatively few (only 22%) had a governance plan.

The large number of organizations lacking AI governance is disturbing. While it’s easy to assume that AI governance isn’t necessary if you’re only doing some experiments and proof-of-concept projects, that’s dangerous. At some point, your proof-of-concept is likely to turn into an actual product, and then your governance efforts will be playing catch-up. It’s even more dangerous when you’re relying on AI applications in production. Without formalizing some kind of AI governance, you’re less likely to know when models are becoming stale, when results are biased, or when data has been collected improperly.

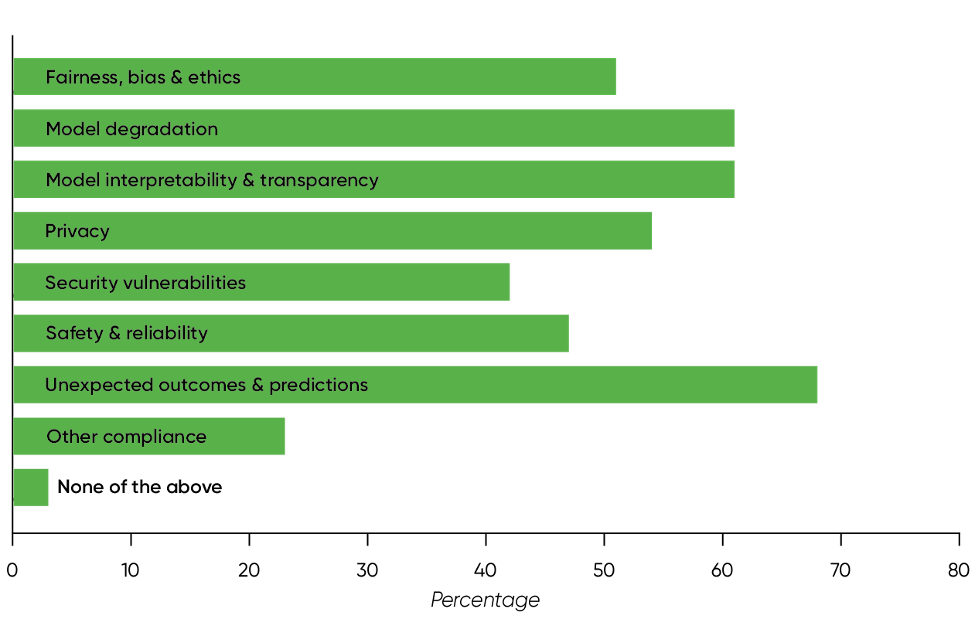

While we didn’t ask about AI governance in last year’s survey, and consequently can’t do year-over-year comparisons, we did ask respondents who had AI in production what risks they checked for. We saw almost no change. Some risks were up a percentage point or two and some were down, but the ordering remained the same. Unexpected outcomes remained the biggest risk (68%, down from 71%), followed closely by model interpretability and model degradation (both 61%). It’s worth noting that unexpected outcomes and model degradation are business issues. Interpretability, privacy (54%), fairness (51%), and safety (46%) are all human issues that may have a direct impact on individuals. While there may be AI applications where privacy and fairness aren’t issues (for example, an embedded system that decides whether the dishes in your dishwasher are clean), companies with AI practices clearly need to place a higher priority on the human impact of AI.

We’re also surprised to see that security remains close to the bottom of the list (42%, unchanged from last year). Security is finally being taken seriously by many businesses, just not for AI. Yet AI has many unique risks, among them data poisoning, malicious inputs that generate false predictions, and reverse engineering of models to expose private information. After last year’s many costly attacks against businesses and their data, there’s no excuse for being lax about cybersecurity. Unfortunately, it looks like AI practices are slow in catching up.

Governance and risk-awareness are certainly issues we’ll watch in the future. If companies developing AI systems don’t put some kind of governance in place, they are risking their businesses. AI will be controlling you, with unpredictable results—results that increasingly include damage to your reputation and large legal judgments. The least of these risks is that governance will be imposed by legislation, and those who haven’t been practicing AI governance will need to catch up.

Tools

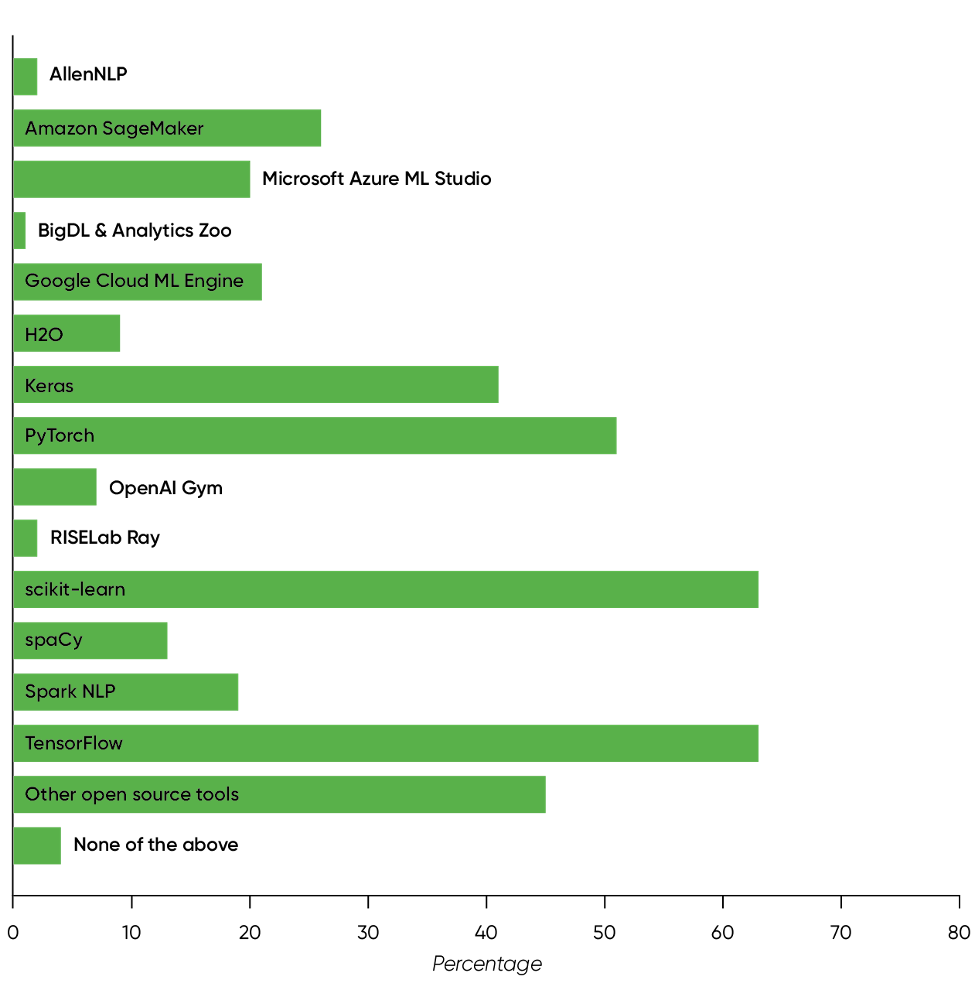

When we looked at the tools used by respondents working at companies with AI in production, our results were very similar to last year’s. TensorFlow and scikit-learn are the most widely used (both 63%), followed by PyTorch, Keras, and Amazon SageMaker (50%, 40%, and 26%, respectively). All of these are within a few percentage points of last year’s numbers, typically a couple of percentage points lower. Respondents were allowed to select multiple entries; this year the average number of entries per respondent appeared to be lower, accounting for the drop in the percentages (though we’re unsure why respondents checked fewer entries).
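
The link between "fewer entries per respondent" and "lower percentages" follows from how multi-select questions are tallied: each tool's share is the fraction of respondents who checked it, so the shares add up to the average number of boxes checked. A minimal sketch with hypothetical responses (not the survey's data; `tool_shares` is an illustrative name):

```python
from collections import Counter


def tool_shares(responses):
    """Fraction of respondents who selected each tool (multi-select question)."""
    n = len(responses)
    if n == 0:
        return {}
    # set() guards against a respondent listing the same tool twice.
    counts = Counter(tool for picks in responses for tool in set(picks))
    return {tool: count / n for tool, count in counts.items()}


# Hypothetical responses; not the survey's data.
more_picks = [
    ["TensorFlow", "scikit-learn", "PyTorch"],
    ["TensorFlow", "scikit-learn"],
    ["scikit-learn", "PyTorch"],
    ["TensorFlow"],
]
# Same respondents, but each one checks only their first box.
fewer_picks = [picks[:1] for picks in more_picks]

for label, responses in [("more picks", more_picks), ("fewer picks", fewer_picks)]:
    shares = tool_shares(responses)
    avg_picks = sum(len(set(p)) for p in responses) / len(responses)
    # The shares always sum to the average number of selections per respondent.
    print(label, shares, "sum:", sum(shares.values()), "avg picks:", avg_picks)
```

When the average number of selections falls, the total of the shares falls with it, so no tool's share can rise and at least one must drop, even if no respondent has stopped using a tool.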

There appears to be some consolidation in the tools marketplace. Although it’s great to root for the underdogs, the tools at the bottom of the list were also slightly down: AllenNLP (2.4%), BigDL (1.3%), and RISELab’s Ray (1.8%). Again, the shifts are small, but dropping by one percent when you’re only at 2% or 3% to start with could be significant—much more significant than scikit-learn’s drop from 65% to 63%. Or perhaps not; when you only have a 3% share of the respondents, small, random fluctuations can seem large.
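
The comparison above is between absolute and relative change. A short sketch of the arithmetic: the scikit-learn figures come from the report, while the 3% to 2% case is an illustrative one-point drop for a tool near the bottom of the list, not a reported number.

```python
def relative_change(old, new):
    """Change from old to new, expressed as a fraction of the old value."""
    if old == 0:
        raise ValueError("relative change is undefined when the old value is 0")
    return (new - old) / old


# scikit-learn: 65% -> 63% (from the report).
print(f"scikit-learn: {relative_change(65, 63):.1%}")
# Illustrative small tool: 3% -> 2% (hypothetical one-point drop).
print(f"small tool:   {relative_change(3, 2):.1%}")
```

A two-point drop from 65% is a relative decline of about 3%. A one-point drop from 3% is a relative decline of about a third, although with so few respondents behind a 3% share it may still be noise.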

Automating ML

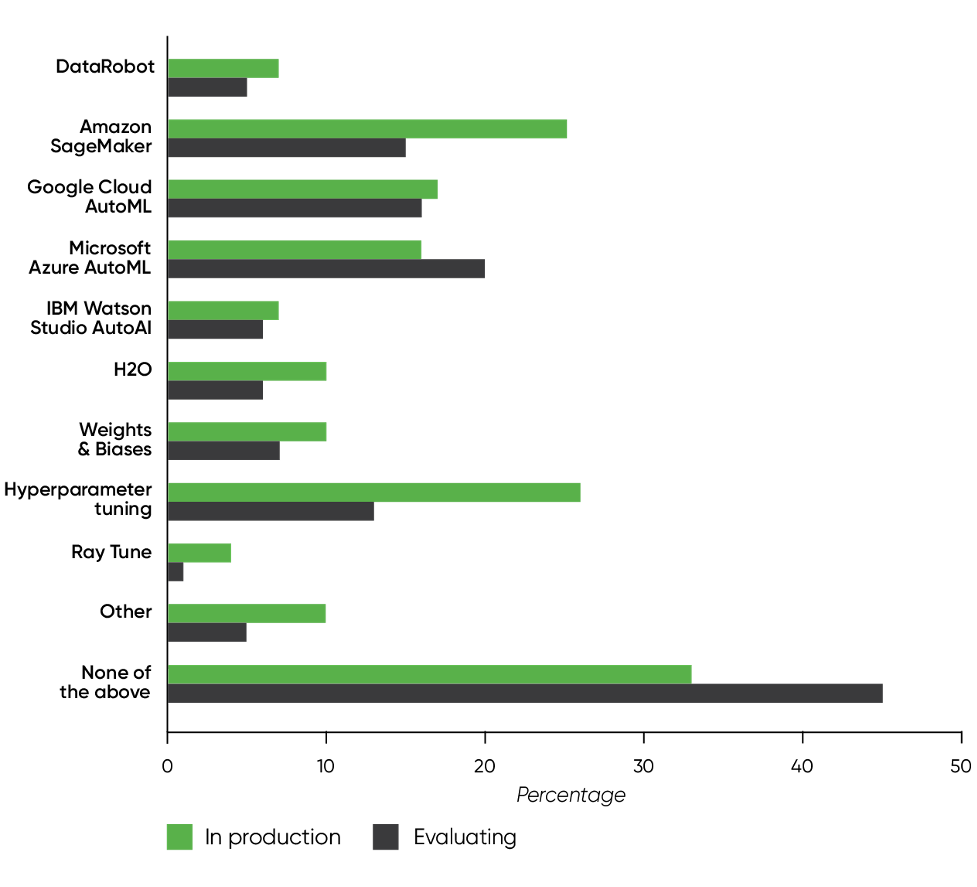

We took an additional look at tools for automatically generating models. These tools are commonly called “AutoML” (though that’s also a product name used by Google and Microsoft). They’ve been around for a few years; the company developing DataRobot, one of the oldest tools for automating machine learning, was founded in 2012. Although building models and programming aren’t the same thing, these tools are part of the “low code” movement. AutoML tools fill similar needs: allowing more people to work effectively with AI and eliminating the drudgery of doing hundreds (if not thousands) of experiments to tune a model.

Until now, the use of AutoML has been a relatively small part of the picture. This is one of the few areas where we see a significant difference between this year and last year. Last year 51% of the respondents with AI in production said they weren’t using AutoML tools. This year only 33% responded “None of the above” (and didn’t write in an alternate answer).

Respondents who were “evaluating” the use of AI appear to be less inclined to use AutoML tools (45% responded “None of the above”). However, there were some important exceptions. Respondents evaluating ML were more likely to use Azure AutoML than respondents with ML in production. This fits anecdotal reports that Microsoft Azure is the most popular cloud service for organizations that are just moving to the cloud. It’s also worth noting that the usage of Google Cloud AutoML and IBM AutoAI was similar for respondents who were evaluating AI and for those who had AI in production.

Deploying and Monitoring AI

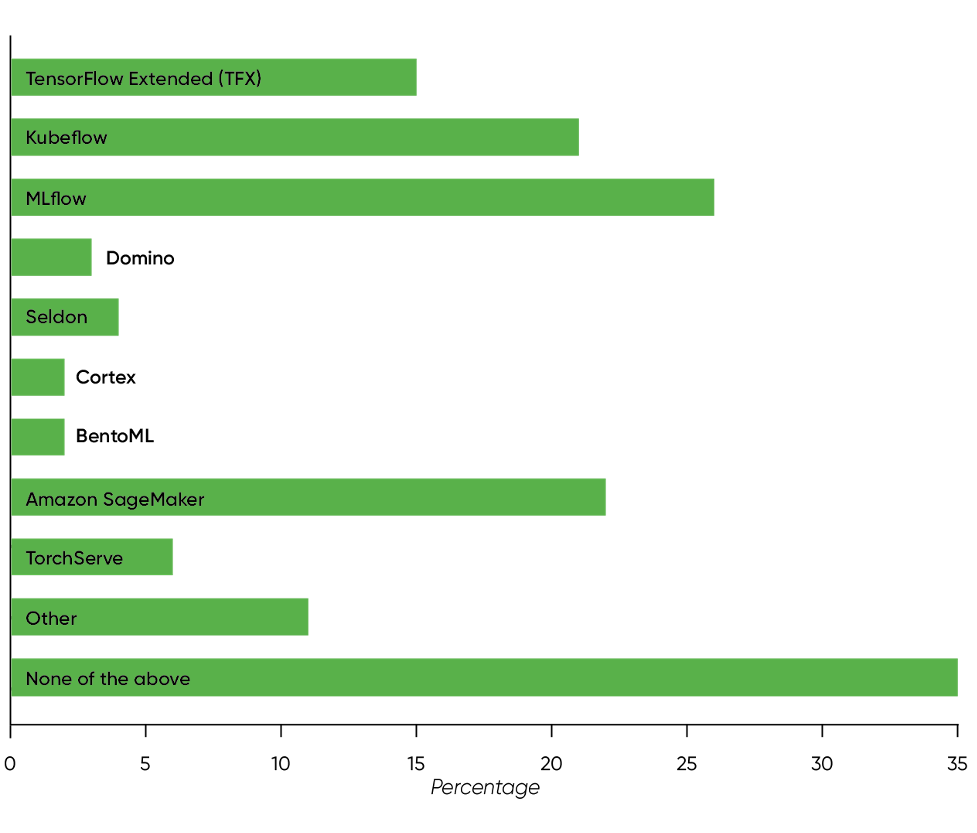

There also appeared to be an increase in the use of automated tools for deployment and monitoring among respondents with AI in production. “None of the above” was still the answer chosen by the largest percentage of respondents (35%), but it was down from 46% a year ago. The tools they were using were similar to last year’s: MLflow (26%), Kubeflow (21%), and TensorFlow Extended (TFX, 15%). Usage of MLflow and Kubeflow increased since 2021; TFX was down slightly. Amazon SageMaker (22%) and TorchServe (6%) were two new products with significant usage; SageMaker in particular is poised to become a market leader. We didn’t see meaningful year-over-year changes for Domino, Seldon, or Cortex, none of which had a significant market share among our respondents. (BentoML is new to our list.)

We saw similar results when we looked at automated tools for data versioning, model tuning, and experiment tracking. Again, we saw a significant reduction in the percentage of respondents who selected “None of the above,” though it was still the most common answer (40%, down from 51%). A significant number said they were using homegrown tools (24%, up from 21%). MLflow was the only tool we asked about that appeared to be winning the hearts and minds of our respondents, with 30% reporting that they used it. Everything else was under 10%. A healthy, competitive marketplace? Perhaps. There’s certainly a lot of room to grow, and we don’t believe that the problem of data and model versioning has been solved yet.

AI at a Crossroads

Now that we’ve looked at all the data, where is AI at the start of 2022, and where will it be a year from now? You could make a good argument that AI adoption has stalled. We don’t think that’s the case. Neither do venture capitalists; a study by the OECD, Venture Capital Investments in Artificial Intelligence, says that in 2020, 20% of all VC funds went to AI companies. We would bet that number is also unchanged in 2021. But what are we missing? Is enterprise AI stagnating?

Andrew Ng, in his newsletter The Batch, paints an optimistic picture. He points to Stanford’s AI Index Report for 2022, which says that private investment almost doubled between 2020 and 2021. He also points to the rise in regulation as evidence that AI is unavoidable: it’s an inevitable part of 21st century life. We agree that AI is everywhere, and in many places, it’s not even seen. As we’ve mentioned, businesses that are using third-party advertising services are almost certainly using AI, even if they never write a line of code. It’s embedded in the advertising application. Invisible AI—AI that has become part of the infrastructure—isn’t going away. In turn, that may mean that we’re thinking about AI deployment the wrong way. What’s important isn’t whether organizations have deployed AI on their own servers or on someone else’s. What we should really measure is whether organizations are using infrastructural AI that’s embedded in other systems that are provided as a service. AI as a service (including AI as part of another service) is an inevitable part of the future.

But not all AI is invisible; some is very visible. AI is being adopted in some ways that, until the past year, we’d have considered unimaginable. We’re all familiar with chatbots, and the idea that AI can give us better chatbots wasn’t a stretch. But GitHub’s Copilot was a shock: we didn’t expect AI to write software. We saw (and wrote about) the research leading up to Copilot but didn’t believe it would become a product so soon. What’s more shocking? We’ve heard that, for some programming languages, as much as 30% of new code is being suggested by Copilot. At first, many programmers thought that Copilot was no more than AI’s clever party trick. That’s clearly not the case. Copilot has become a useful tool in surprisingly little time, and with time, it will only get better.

Other applications of large language models—automated customer service, for example—are rolling out (our survey didn’t pay enough attention to them). It remains to be seen whether humans will feel any better about interacting with AI-driven customer service than they do with humans (or horrendously scripted bots). There’s an intriguing hint that AI systems are better at delivering bad news to humans. If we need to be told something we don’t want to hear, we’d prefer it come from a faceless machine.

We’re starting to see more adoption of automated tools for deployment, along with tools for data and model versioning. That’s a necessity; if AI is going to be deployed into production, you have to be able to deploy it effectively, and modern IT shops don’t look kindly on handcrafted artisanal processes.

There are many more places we expect to see AI deployed, both visible and invisible. Some of these applications are quite simple and low-tech. My four-year-old car displays the speed limit on the dashboard. There are any number of ways this could be done, but after some observation, it became clear that this was a simple computer vision application. (It would report incorrect speeds if a speed limit sign was defaced, and so on.) It’s probably not the fanciest neural network, but there’s no question we would have called this AI a few years ago. Where else? Thermostats, dishwashers, refrigerators, and other appliances? Smart refrigerators were a joke not long ago; now you can buy them.

We also see AI finding its way onto smaller and more limited devices. Cars and refrigerators have seemingly unlimited power and space to work with. But what about small devices like phones? Companies like Google have put a lot of effort into running AI directly on the phone, both doing work like voice recognition and text prediction and actually training models using techniques like federated learning—all without sending private data back to the mothership. Are companies that can’t afford to do AI research on Google’s scale benefiting from these developments? We don’t yet know. Probably not, but that could change in the next few years and would represent a big step forward in AI adoption.

On the other hand, while Ng is certainly right that demands to regulate AI are increasing, and those demands are probably a sign of AI’s ubiquity, they’re also a sign that the AI we’re getting is not the AI we want. We’re disappointed not to see more concern about ethics, fairness, transparency, and mitigating bias. If anything, interest in these areas has slipped slightly. When the biggest concern of AI developers is that their applications might give “unexpected results,” we’re not in a good place. If you only want expected results, you don’t need AI. (Yes, I’m being catty.) We’re concerned that only half of the respondents with AI in production report that AI governance is in place. And we’re horrified, frankly, not to see more concern about security. At least there hasn’t been a year-over-year decrease—but that’s a small consolation, given the events of last year.

AI is at a crossroads. We believe that AI will be a big part of our future. But will that be the future we want or the future we get because we didn’t pay attention to ethics, fairness, transparency, and mitigating bias? And will that future arrive in 5, 10, or 20 years? At the start of this report, we said that when AI was the darling of the technology press, it was enough to be interesting. Now it’s time for AI to get real, for AI practitioners to develop better ways to collaborate between AI and humans, to find ways to make work more rewarding and productive, to build tools that can get around the biases, stereotypes, and mythologies that plague human decision-making. Can AI succeed at that? If there’s another AI winter, it will be because people—real people, not virtual ones—don’t see AI generating real value that improves their lives. It will be because the world is rife with AI applications that they don’t trust. And if the AI community doesn’t take the steps needed to build trust and real human value, the temperature could get rather cold.
